Measuring Cooperation and Reciprocity

From Evolution and Games

(→Measuring reciprocity) |

(→Graphic interpretation) |

||

| (15 intermediate revisions not shown) | |||

| Line 1: | Line 1: | ||

[[Category:Repeated Games]] | [[Category:Repeated Games]] | ||

| - | Here we formally define | + | Here we formally define measures for cooperation and reciprocity. |

| - | + | = Introduction = | |

There are two reasons why we would like to have measures for cooperativeness and reciprocity. The first and most important reason is obvious; we would simply like to know how cooperative, and how reciprocal, strategies are during a run. The second reason is that these measures may serve as an indication of indirect invasions. Typically an indirect invasion is characterized by a change in reciprocity followed by a change in cooperativeness. | There are two reasons why we would like to have measures for cooperativeness and reciprocity. The first and most important reason is obvious; we would simply like to know how cooperative, and how reciprocal, strategies are during a run. The second reason is that these measures may serve as an indication of indirect invasions. Typically an indirect invasion is characterized by a change in reciprocity followed by a change in cooperativeness. | ||

| - | + | = Measuring cooperation = | |

Any measure of cooperativeness will have to weigh the different histories, and as we will see, every choice how to weigh them has appealing properties | Any measure of cooperativeness will have to weigh the different histories, and as we will see, every choice how to weigh them has appealing properties | ||

| Line 36: | Line 36: | ||

The overall cooperativeness of a strategy <math>S</math> can then be defined as the cooperativeness at the beginning, where we have the empty history; <math>C(S) =C(S,\overline{h}_{1})</math>. | The overall cooperativeness of a strategy <math>S</math> can then be defined as the cooperativeness at the beginning, where we have the empty history; <math>C(S) =C(S,\overline{h}_{1})</math>. | ||

| - | + | == Graphic interpretation == | |

| Line 44: | Line 44: | ||

<gallery> | <gallery> | ||

File:AlwaysCooperate.png | Always Cooperate | File:AlwaysCooperate.png | Always Cooperate | ||

| + | File:AlwaysCooperateFSA.png | ||

File:AlwaysDefect.png | Always Defect | File:AlwaysDefect.png | Always Defect | ||

| + | File:AlwaysDefectFSA.png | ||

File:FirstMove.png | Condition on first move | File:FirstMove.png | Condition on first move | ||

| + | File:ConditionOnFirstMoveFSA.png | ||

File:GrimTrigger.png | Grim Trigger | File:GrimTrigger.png | Grim Trigger | ||

| + | File:GrimTriggerFSA.png | ||

File:NegativeHandshake.png | Negative Handshake (DTFT) | File:NegativeHandshake.png | Negative Handshake (DTFT) | ||

| + | File:NegativeHandshakeFSA.png | ||

File:TitForTat.png |Tit for Tat | File:TitForTat.png |Tit for Tat | ||

| + | File:TitForTatFSA.png | ||

File:TatForTit.png | Tat for Tit | File:TatForTit.png | Tat for Tit | ||

| + | File:TatForTitFSA.png | ||

File:TitForTwoTats.png | Tit for two Tats | File:TitForTwoTats.png | Tit for two Tats | ||

| + | File:TitForTwoTatsFSA.png | ||

File:Wsls.png | Win stay lose shift | File:Wsls.png | Win stay lose shift | ||

| + | File:WslsFSA.png | ||

</gallery> | </gallery> | ||

</center> | </center> | ||

| - | == | + | == Measure of cooperation for well-known strategies == |

It is obvious that <math>C(AllC) = 1</math> and that <math>C(AllD) = 0</math>. A strategy that starts with cooperation, and further conditions play on the first move entirely has cooperativeness <math>1-\frac{\delta }{2}</math>. This is sensible; if <math>\delta =0</math>, then the first move is the | It is obvious that <math>C(AllC) = 1</math> and that <math>C(AllD) = 0</math>. A strategy that starts with cooperation, and further conditions play on the first move entirely has cooperativeness <math>1-\frac{\delta }{2}</math>. This is sensible; if <math>\delta =0</math>, then the first move is the | ||

| Line 76: | Line 85: | ||

which is <math>1</math> at <math>\delta =0</math> for similar reasons, and goes to <math>0</math> if <math>\delta</math> goes to <math>1</math>. | which is <math>1</math> at <math>\delta =0</math> for similar reasons, and goes to <math>0</math> if <math>\delta</math> goes to <math>1</math>. | ||

| - | + | = Measuring reciprocity = | |

A measure for reciprocity can be constructed by comparing how much the cooperativeness of strategy <math>S</math> is changed if its opponent plays <math>D</math> rather than <math>C</math>. Again, histories of the same length are weighted equally here. | A measure for reciprocity can be constructed by comparing how much the cooperativeness of strategy <math>S</math> is changed if its opponent plays <math>D</math> rather than <math>C</math>. Again, histories of the same length are weighted equally here. | ||

<math>R(S) =\sum_{t=1}^{\infty} \sum_{\overline{h}\in \overline{H}_{t}} (\frac{\delta }{2}) ^{t-1} [ C(S,(\overline{h},C)) -C(S,(\overline{h},D))] </math> | <math>R(S) =\sum_{t=1}^{\infty} \sum_{\overline{h}\in \overline{H}_{t}} (\frac{\delta }{2}) ^{t-1} [ C(S,(\overline{h},C)) -C(S,(\overline{h},D))] </math> | ||

| + | |||

| + | In the figure above, this is visualized as the difference in blueness below two neighbouring bits that share their history up to the one before last period. | ||

| + | |||

| + | == Measure of reciprocity for well-known strategies == | ||

| + | |||

| + | Simple calculations now give that <math>R(AllC) =R(AllD) =0</math> and <math>R(TFT) =1</math>. Also, if we look at a strategy that only conditions on the first move and that defects forever if the first move of the other was <math>D</math>, and cooperates forever if the first move of the other was <math>C</math>, this strategy also has reciprocity <math>1</math>. It is not hard to see that <math>-1</math> and <math>+1</math> are in fact the lower and upper bounds for reciprocity with this equal weighing of all strategies of the same length. | ||

| + | |||

| + | Likewise, | ||

| + | |||

| + | <math>R(GRIM) =\sum_{t=1}^{\infty }(\frac{\delta }{2}) ^{t-1}[ \frac{2-2\delta }{2-\delta }-0] =\frac{4(1-\delta) }{(2-\delta) ^{2}} =\frac{4( 1-\delta) }{( 2-\delta) ^{2}}</math>. | ||

| + | |||

| + | <math>R(TFT)=\sum_{t=1}^{\infty} \sum ( \frac{\delta }{2}) ^{t-1} [\frac{1}{2}+\frac{1}{2}( 1-\delta) -( \frac{1}{2}-\frac{1}{2}( 1-\delta) )] 2^{t-1}=\sum_{t=1}^{\infty }\delta ^{t-1}(1-\delta) =1</math> | ||

| + | |||

| + | |||

| + | Note that here the reciprocity of Grim Trigger is lower than that of <math>TFT</math>, which is due to the fact that for many histories <math>GRIM</math> will only play a sequence of <math>D</math>'s either way. | ||

| + | |||

| + | = Density-dependent measures = | ||

| + | |||

| + | Alternatively we could measure the cooperativeness and reciprocity of a strategy given the population it is in. In that case, we should not weight all histories of a given length equally, but in the proportions in which they do occur given the actual population of strategies. Hence the weight <math>(1/2)^{t-1}</math>, which is one divided by the number of strategies in <math>H_{t}</math>, will then be replaced by their actual proportions. For instance, in a population that consists of any mixture of <math>AllC</math>, <math>TFT</math> and <math>GRIM</math>, the only history at time <math>t</math> that occurs, is a sequences of <math>t-1</math> consecutive <math>C'</math>s. The measure for cooperativeness then simply becomes the expected times a strategy plays <math>C</math> divided by the expected number of rounds. | ||

| + | |||

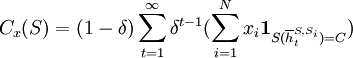

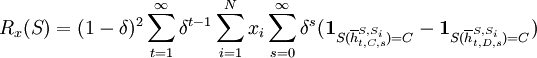

| + | Suppose the population is given by a vector of frequencies <math>x=[x_{1},...,x_{N}]</math> where <math>x_{i}</math> is the frequency of strategy <math>S_{i}</math>. Then we define the population-dependent cooperativeness of a strategy <math>S</math> as follows: | ||

| + | |||

| + | <math>C_{x}(S) =( 1-\delta) \sum_{t=1}^{\infty}\delta ^{t-1}(\sum_{i=1}^{N}x_{i}\mathbf{1}_{{ S(\overline{h}_{t}^{S,S_{i}}) =C}}) </math> | ||

| + | |||

| + | In a population with only <math>AllC</math>, <math>TFT</math> and <math>GRIM</math>, cooperativeness of all these three strategies is <math>1</math>. In an infinitely large population of <math>\alpha TFT</math> and <math>(1-\alpha) AllD</math>, cooperativeness of <math>TFT</math> is <math>1-\delta +\delta \alpha</math> . | ||

| + | |||

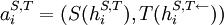

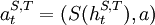

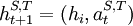

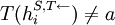

| + | A reasonable way of measuring reciprocity is to compare actual histories with histories that would have unfolded after one-step deviations. So let <math>h_{t,a,s}^{S,T}</math> be the history that for the first <math>t-1</math> steps unfolds recurcively between strategy <math>S</math> and <math>T</math> - as above; | ||

| + | |||

| + | <math>h_{1}^{S,T}=()</math> | ||

| + | |||

| + | and the recursion step | ||

| + | |||

| + | <math>a_{i}^{S,T}=(S(h_{i}^{S,T}) ,T(h_{i}^{S,T\leftarrow}))</math> | ||

| + | |||

| + | <math>h_{i+1}^{S,T}=( h_{i},a_{i}^{S,T}) ,\qquad ~\qquad \qquad \qquad \qquad i=1,2,...,t-1</math>. | ||

| + | |||

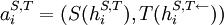

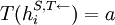

| + | Only at time <math>t</math> the opponent plays <math>a</math>, while strategy <math>S</math> does not deviate and plays <math>S(h_{t}^{S,T})</math>; | ||

| + | |||

| + | <math>a_{t}^{S,T}=(S( h_{t}^{S,T}) ,a)</math> | ||

| + | |||

| + | <math>h_{t+1}^{S,T}=( h_{i},a_{t}^{S,T})</math>. | ||

| + | |||

| + | After that we go back to the normal recursion step | ||

| + | |||

| + | <math>a_{i}^{S,T}=(S( h_{i}^{S,T}) ,T(h_{i}^{S,T\leftarrow}))</math> | ||

| + | |||

| + | <math>h_{i+1}^{S,T}=(h_{i},a_{i}^{S,T}) ,\qquad ~\qquad \qquad \qquad \qquad i=t+1,...t+s</math> | ||

| + | |||

| + | If <math>T(h_{i}^{S,T\leftarrow }) =a</math>, then this is <math>h_{t,a,s}^{S,T}</math> is just the actual history <math>h_{t+1+s}^{S,T}</math>, but if <math>T(h_{i}^{S,T\leftarrow }) \neq a</math>, it is a counterfactual history after a one-step deviation. The history <math>\overline{h}_{t,a,s}^{S,T}</math>, as above, just gives the actions of player <math>T</math> and ignores those of player <math>S</math>. | ||

| + | |||

| + | With this definition, we can make a measure for reciprocity as follows: | ||

| + | |||

| + | <math>R_{x}(S) =(1-\delta)^{2} \sum_{t=1}^{\infty }\delta^{t-1}\sum_{i=1}^{N}x_{i}\sum_{s=0}^{\infty}\delta^{s}(\mathbf{1}_{{S(\overline{h}_{t,C,s}^{S,S_{i}}) =C} }-\mathbf{1}_{{S(\overline{h}_{t,D,s}^{S,S_{i}}) =C}})</math> | ||

| + | |||

| + | |||

| + | In a population of <math>GRIM</math> only, it is relatively easy to see that the reciprocity of <math>GRIM</math> is <math>1</math>; the path of play between <math>GRIM</math> and itself is just a sequence of <math>C</math>'s, while after a deviation <math>GRIM</math> just plays a sequence of <math>D</math>'s. So at any time <math>t</math>, the discounted difference between these sequence from then on, normalised by multiplying by one of the <math>t(1-\delta)</math>'s, is <math>1</math>. So discounting over <math>t</math> and normalizing by the other <math>( 1-\delta)</math> gives a reciprocity measure of <math>1</math>. | ||

| + | |||

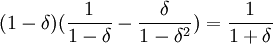

| + | In a population of <math>TFT</math> only, the reciprocity of <math>TFT</math> is <math>\frac{1}{1+\delta }</math>. Again, the path of play between <math>TFT</math> and itself is a sequence of <math>C</math>'s, but now, on the path after any deviation, <math>TFT</math> plays a sequence of alternating <math>D</math>'s and <math>C</math>'s. So at any time <math>t</math>, the discounted and normalized difference between them is <math>(1-\delta) ( \frac{1}{1-\delta }-\frac{\delta }{1-\delta ^{2}}) =\frac{1}{1+\delta }</math>. So discounting over <math>t</math> and normalizing by the other <math>(1-\delta)</math> gives the same number. | ||

| + | |||

| + | This comparison is in line with what we might want from a reciprocity measure that depends on the actual population; at any stage, the threat that grim trigger poses to itself is maximal, and larger than the threat <math>TFT</math> poses to itself. | ||

| + | |||

| + | Another comparison shows that this measure also picks up the fact that, if the punishment in Grim Trigger is in fact triggered on the path of play, then on the remainder of the path, <math>GRIM</math> is actually not reciprocal at all anymore. With <math>TFT</math> on the other hand, reciprocity actually remains the same, whether the punishment has been triggered in the past or not. This is reflected in the following example. | ||

| + | |||

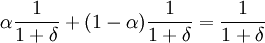

| + | In a population of <math>\alpha TitForTat</math> and <math>(1-\alpha) TatForTit</math>, the reciprocity of <math>TitForTat</math> is <math>\alpha \frac{1}{1+\delta }+( 1-\alpha) \frac{1}{1+\delta }=\frac{1}{1+\delta }</math>; the reciprocity of <math>TitForTat</math> against itself was computed above, and for the computation of <math>TitForTat</math> against <math>TatForTit</math> it is enough to realize that the comparison between actual and counterfactual is between <math>DCDC...</math> versus <math>CCCC...</math> at odd <math>t</math>'s and between <math>CDCD...</math> versus <math>DDDD...</math> at even <math>t</math>'s. | ||

| + | |||

| + | In a population of <math>\alpha GRIM</math> and <math>(1-\alpha) TatForTit</math>, the reciprocity of <math>GRIM</math> is <math>\alpha +(1-\alpha) ( 1-\delta) =1-\delta +\alpha \delta </math>; in the latter interaction, <math>GRIM</math> only alters its behaviour in response to a change at <math>t=1</math>. So for not too large <math>\alpha</math> - implying that there is enough <math>TatForTit</math> to have a noticeable effect of the punishment being triggered - and not too small <math>\delta</math> <math>GRIM</math> now is actually less reciprocal than <math>TitForTat</math>. | ||

| + | |||

| + | Dependence on the population a strategy finds itself in can be seen as a good or a bad thing. For picking up indirect invasions, it seems to be a good thing; changes ''far off'' the current path of play between strategies do not change this reciprocity measure; it only changes if a strategy mutates into one that reacts differently to a one-step deviation. This implies that only becoming more or becoming less reciprocal in the way that is relevant for indirect invasions is picked up. On the other hand, it does not lend itself for a general, environment-independent statement of how reciprocal or cooperative a strategy is. | ||

| + | |||

| + | |||

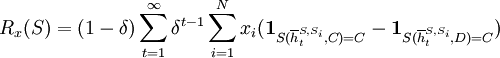

| + | Another possibility is to only look at the actions directly after a one-step deviation. Then we would get | ||

| + | |||

| + | <math>R_{x}(S) =(1-\delta) \sum_{t=1}^{\infty}\delta ^{t-1} \sum_{i=1}^{N}x_{i}(\mathbf{1}_{{S(\overline{h}_{t}^{S,S_{i}},C) =C} }-\mathbf{1}_{{ S(\overline{h}_{t}^{S,S_{i}},D) =C} })</math> | ||

| + | |||

| + | Here the reciprocity of <math>AllC</math> is <math>0</math> again. The reciprocity of both <math>TFT</math> and <math>GRIM</math> in a population of <math>TFT</math>, <math>GRIM</math> and <math>AllC</math> is <math>1</math> here. | ||

Current revision as of 14:00, 29 April 2010

Here we formally define measures for cooperation and reciprocity.

Contents |

Introduction

There are two reasons why we would like to have measures for cooperativeness and reciprocity. The first and most important reason is obvious; we would simply like to know how cooperative, and how reciprocal, strategies are during a run. The second reason is that these measures may serve as an indication of indirect invasions. Typically an indirect invasion is characterized by a change in reciprocity followed by a change in cooperativeness.

Measuring cooperation

Any measure of cooperativeness will have to weigh the different histories, and as we will see, every choice how to weigh them has appealing properties and drawbacks. In contrast to the definitions earlier, here it is more natural to look at histories that only reflect what actions the other player has played. This captures all relevant histories for the measurement of cooperativeness, because what a strategy S itself has played is uniquely determined by the history of actions by the other player.

Again, we will sometimes also write  for a history

for a history  , and we get the following sets of possible histories at time t.

, and we get the following sets of possible histories at time t.

With the repeated prisonners dilemma we have A = C,D, so in that case there are 2t − 1 histories

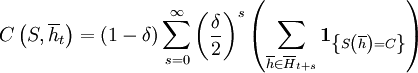

We begin with a measure that tells us how cooperative a strategy is, given that it is facing a history  . If we weigh a history at time t + s with the probability that the game actually reaches round t + s − 1, given that it has already reached round t − 1, and if we also divide by the number of different histories of length t + s − 1, under the restriction that the first t − 1 rounds of these histories are given by

. If we weigh a history at time t + s with the probability that the game actually reaches round t + s − 1, given that it has already reached round t − 1, and if we also divide by the number of different histories of length t + s − 1, under the restriction that the first t − 1 rounds of these histories are given by  , we get the following. Note that this measure does not depend on the

environment a strategy is in.

, we get the following. Note that this measure does not depend on the

environment a strategy is in.

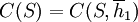

The overall cooperativeness of a strategy S can then be defined as the cooperativeness at the beginning, where we have the empty history;  .

.

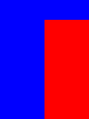

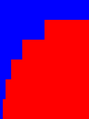

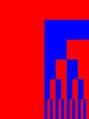

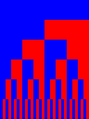

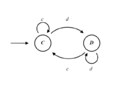

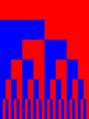

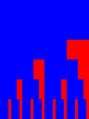

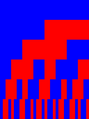

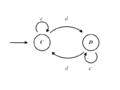

Graphic interpretation

An intuition for what this measure does can be gained from the following figure. The top bar represents the empty history. The second bar represents histories of length 1, and is split in two; the history where the other has cooperated, and the one where the other has defected. The third bar represents histories of lenth 2, and is split in four; the histories CC, CD, DC and DD. This continues indefinitely, but for the pictures we restrict ourselves to histories of length 5 or less. If a part is blue, then that means that the strategy reacts to this history with cooperation, if it is red, then the strategy reacts with defection. Cooperativeness weighs the blueness of those pictures.

Measure of cooperation for well-known strategies

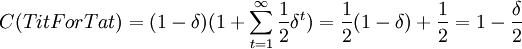

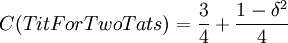

It is obvious that C(AllC) = 1 and that C(AllD) = 0. A strategy that starts with cooperation, and further conditions play on the first move entirely has cooperativeness  . This is sensible; if δ = 0, then the first move is the

only move, and since this strategy cooperates on the first move, it should have cooperativeness measure 1. On the other hand, except for the first move, this strategy cooperates in exactly half of the histories of length t for all t > 1. Therefore it makes sense that if δ goes to 1, then cooperativeness goes to

. This is sensible; if δ = 0, then the first move is the

only move, and since this strategy cooperates on the first move, it should have cooperativeness measure 1. On the other hand, except for the first move, this strategy cooperates in exactly half of the histories of length t for all t > 1. Therefore it makes sense that if δ goes to 1, then cooperativeness goes to  .

.

For tit for tat, we have:

More simple computations show that:

, and

, and

.

.

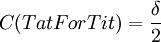

The last simple computation shows that

,

,

which is 1 at δ = 0 for similar reasons, and goes to 0 if δ goes to 1.

Measuring reciprocity

A measure for reciprocity can be constructed by comparing how much the cooperativeness of strategy S is changed if its opponent plays D rather than C. Again, histories of the same length are weighted equally here.

![R(S) =\sum_{t=1}^{\infty} \sum_{\overline{h}\in \overline{H}_{t}} (\frac{\delta }{2}) ^{t-1} [ C(S,(\overline{h},C)) -C(S,(\overline{h},D))]](/wiki/images/math/1/0/a/10aa4ff70d00b80e33decb85f43278c8.png)

In the figure above, this is visualized as the difference in blueness below two neighbouring bits that share their history up to the one before last period.

Measure of reciprocity for well-known strategies

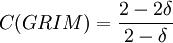

Simple calculations now give that R(AllC) = R(AllD) = 0 and R(TFT) = 1. Also, if we look at a strategy that only conditions on the first move and that defects forever if the first move of the other was D, and cooperates forever if the first move of the other was C, this strategy also has reciprocity 1. It is not hard to see that − 1 and + 1 are in fact the lower and upper bounds for reciprocity with this equal weighing of all strategies of the same length.

Likewise,

![R(GRIM) =\sum_{t=1}^{\infty }(\frac{\delta }{2}) ^{t-1}[ \frac{2-2\delta }{2-\delta }-0] =\frac{4(1-\delta) }{(2-\delta) ^{2}} =\frac{4( 1-\delta) }{( 2-\delta) ^{2}}](/wiki/images/math/c/b/4/cb436739af0771c8faadb16705cb54f6.png) .

.

![R(TFT)=\sum_{t=1}^{\infty} \sum ( \frac{\delta }{2}) ^{t-1} [\frac{1}{2}+\frac{1}{2}( 1-\delta) -( \frac{1}{2}-\frac{1}{2}( 1-\delta) )] 2^{t-1}=\sum_{t=1}^{\infty }\delta ^{t-1}(1-\delta) =1](/wiki/images/math/7/e/9/7e94653451ba07989ac4302b2758d47c.png)

Note that here the reciprocity of Grim Trigger is lower than that of TFT, which is due to the fact that for many histories GRIM will only play a sequence of D's either way.

Density-dependent measures

Alternatively we could measure the cooperativeness and reciprocity of a strategy given the population it is in. In that case, we should not weight all histories of a given length equally, but in the proportions in which they do occur given the actual population of strategies. Hence the weight (1 / 2)t − 1, which is one divided by the number of strategies in Ht, will then be replaced by their actual proportions. For instance, in a population that consists of any mixture of AllC, TFT and GRIM, the only history at time t that occurs, is a sequences of t − 1 consecutive C's. The measure for cooperativeness then simply becomes the expected times a strategy plays C divided by the expected number of rounds.

Suppose the population is given by a vector of frequencies x = [x1,...,xN] where xi is the frequency of strategy Si. Then we define the population-dependent cooperativeness of a strategy S as follows:

In a population with only AllC, TFT and GRIM, cooperativeness of all these three strategies is 1. In an infinitely large population of αTFT and (1 − α)AllD, cooperativeness of TFT is 1 − δ + δα .

A reasonable way of measuring reciprocity is to compare actual histories with histories that would have unfolded after one-step deviations. So let  be the history that for the first t − 1 steps unfolds recurcively between strategy S and T - as above;

be the history that for the first t − 1 steps unfolds recurcively between strategy S and T - as above;

and the recursion step

.

.

Only at time t the opponent plays a, while strategy S does not deviate and plays  ;

;

.

.

After that we go back to the normal recursion step

If  , then this is

, then this is  is just the actual history

is just the actual history  , but if

, but if  , it is a counterfactual history after a one-step deviation. The history

, it is a counterfactual history after a one-step deviation. The history  , as above, just gives the actions of player T and ignores those of player S.

, as above, just gives the actions of player T and ignores those of player S.

With this definition, we can make a measure for reciprocity as follows:

In a population of GRIM only, it is relatively easy to see that the reciprocity of GRIM is 1; the path of play between GRIM and itself is just a sequence of C's, while after a deviation GRIM just plays a sequence of D's. So at any time t, the discounted difference between these sequence from then on, normalised by multiplying by one of the t(1 − δ)'s, is 1. So discounting over t and normalizing by the other (1 − δ) gives a reciprocity measure of 1.

In a population of TFT only, the reciprocity of TFT is  . Again, the path of play between TFT and itself is a sequence of C's, but now, on the path after any deviation, TFT plays a sequence of alternating D's and C's. So at any time t, the discounted and normalized difference between them is

. Again, the path of play between TFT and itself is a sequence of C's, but now, on the path after any deviation, TFT plays a sequence of alternating D's and C's. So at any time t, the discounted and normalized difference between them is  . So discounting over t and normalizing by the other (1 − δ) gives the same number.

. So discounting over t and normalizing by the other (1 − δ) gives the same number.

This comparison is in line with what we might want from a reciprocity measure that depends on the actual population; at any stage, the threat that grim trigger poses to itself is maximal, and larger than the threat TFT poses to itself.

Another comparison shows that this measure also picks up the fact that, if the punishment in Grim Trigger is in fact triggered on the path of play, then on the remainder of the path, GRIM is actually not reciprocal at all anymore. With TFT on the other hand, reciprocity actually remains the same, whether the punishment has been triggered in the past or not. This is reflected in the following example.

In a population of αTitForTat and (1 − α)TatForTit, the reciprocity of TitForTat is  ; the reciprocity of TitForTat against itself was computed above, and for the computation of TitForTat against TatForTit it is enough to realize that the comparison between actual and counterfactual is between DCDC... versus CCCC... at odd t's and between CDCD... versus DDDD... at even t's.

; the reciprocity of TitForTat against itself was computed above, and for the computation of TitForTat against TatForTit it is enough to realize that the comparison between actual and counterfactual is between DCDC... versus CCCC... at odd t's and between CDCD... versus DDDD... at even t's.

In a population of αGRIM and (1 − α)TatForTit, the reciprocity of GRIM is α + (1 − α)(1 − δ) = 1 − δ + αδ; in the latter interaction, GRIM only alters its behaviour in response to a change at t = 1. So for not too large α - implying that there is enough TatForTit to have a noticeable effect of the punishment being triggered - and not too small δ GRIM now is actually less reciprocal than TitForTat.

Dependence on the population a strategy finds itself in can be seen as a good or a bad thing. For picking up indirect invasions, it seems to be a good thing; changes far off the current path of play between strategies do not change this reciprocity measure; it only changes if a strategy mutates into one that reacts differently to a one-step deviation. This implies that only becoming more or becoming less reciprocal in the way that is relevant for indirect invasions is picked up. On the other hand, it does not lend itself for a general, environment-independent statement of how reciprocal or cooperative a strategy is.

Another possibility is to only look at the actions directly after a one-step deviation. Then we would get

Here the reciprocity of AllC is 0 again. The reciprocity of both TFT and GRIM in a population of TFT, GRIM and AllC is 1 here.